Real-time ray tracing is at the cutting edge of real-time rendering. Until recently, ray tracing was far too slow to use for real-time applications, but a new line of NVIDIA GPUs is changing all that.

NVIDIA introduced its new Turing line of graphics cards in 2018. These cards have real-time support for ray tracing (RTX). Ray tracing is currently being used to supplement traditional real-time rendering techniques, but progress is being made that will bring more and more real-time ray tracing content to the consumer market.

Bring the skill of a real-time ray tracing rendering company to your CAD rendering projects. Cad Crowd can advise you on how to feature this state-of-the-art technology in your project.

Since the 1990s, 3D real-time rendering has used a process called rasterization. Rasterization uses objects created from a mesh of triangles or polygons. The rendering pipeline converts each triangle into pixels. The pixels are then shaded before being displayed on the screen.
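To make the triangle-to-pixel step concrete, here is a minimal sketch of a coverage test using edge functions, one common way to decide which pixels a triangle covers. The function names and the tiny 4x4 "screen" are assumptions made for illustration, not part of any particular GPU pipeline.

```python
def edge(a, b, p):
    """Signed area term for point p relative to the edge running from a to b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers fall inside the triangle."""
    covered = set()
    area = edge(v0, v1, v2)
    if area == 0:
        return covered  # a degenerate triangle covers nothing
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside when every edge term has the same sign as the triangle's area.
            if all(w * area >= 0 for w in (w0, w1, w2)):
                covered.add((x, y))
    return covered


# Hypothetical triangle on a 4x4 pixel grid.
pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), 4, 4)
```

A real GPU runs this kind of test for many pixels in parallel and then hands the covered pixels to a shader; the loop here only shows the logic.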

Ray tracing, on the other hand, provides far more realistic imagery by simulating the behavior of light. Ray tracing calculates the color of each pixel by following the path a ray of light would take if it were to travel from the eye or a camera and then bounce off multiple objects in a 3D scene.
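As a rough illustration of following a ray from the camera, the sketch below builds the ray through a given pixel and tests it against a single sphere. The camera placement (at the origin, looking down the negative z axis), the 90-degree field of view, and the function names are all assumptions chosen to keep the example short.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def primary_ray(px, py, width, height, fov_deg=90.0):
    """Unit direction from a camera at the origin, looking down -z, through pixel (px, py)."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale  # pixel row 0 is the top of the image
    return normalize((x, y, -1.0))


def hit_sphere(origin, direction, center, radius):
    """Distance to the nearest hit in front of the origin, or None.

    Assumes a unit-length direction and an origin outside the sphere.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4 * c  # the quadratic's leading coefficient is 1
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2
    return t if t > 0 else None


# The single pixel of a 1x1 image looks straight ahead.
direction = primary_ray(0, 0, 1, 1)
distance = hit_sphere((0.0, 0.0, 0.0), direction, (0.0, 0.0, -5.0), 1.0)
```

A full renderer would repeat this for every pixel and every object, which is exactly the cost that acceleration hardware is meant to cut down.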

As it travels around, a ray may reflect from one object to another. It may be blocked, or it might refract through transparent or semi-transparent surfaces. During ray tracing, all of these interactions are calculated and combined to produce the final color of a pixel. This requires large amounts of memory and processing power but results in highly photorealistic renderings.
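The two interactions named above, reflection and refraction, come down to a little vector math. This is a minimal sketch using the standard mirror-reflection formula and Snell's law; the function names and the convention that `eta` is the ratio of refractive indices (outside over inside) are assumptions for this example.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(d, n):
    """Mirror unit direction d about unit surface normal n."""
    k = 2 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def refract(d, n, eta):
    """Bend unit direction d through a surface with unit normal n.

    eta is n1 / n2, the ratio of refractive indices on the incoming and
    outgoing sides. Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    k = 1 - eta * eta * (1 - cos_i * cos_i)
    if k < 0:
        return None
    return tuple(eta * di + (eta * cos_i - math.sqrt(k)) * ni for di, ni in zip(d, n))


# A ray heading straight down onto a floor whose normal points up.
bounced = reflect((0, -1, 0), (0, 1, 0))
passed = refract((0, -1, 0), (0, 1, 0), 1.0)
```

A ray tracer would weight the colors found along the reflected and refracted directions and add them to the surface's own shading to get the pixel's final color.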

NVIDIA’s RTX platform runs on the tech company’s cutting-edge Volta and Turing lines of GPUs, and combines ray tracing, deep learning, and rasterization to up the game for real-time rendering.

NVIDIA RTX accelerates and enhances graphics using ray tracing, AI-accelerated features, advanced shaders, simulation, and asset interchange formats. It supports application program interfaces (APIs) for producing cinematic photorealism.

NVIDIA announced support for RTX at its GPU Technology Conference in 2018 with a much-celebrated Star Wars demo. NVIDIA GPUs can now provide the capability for professional-quality, real-time ray tracing on consumer and professional workstations.

Bring the expertise of a real-time ray tracing firm to your real-time rendering products. Cad Crowd can advise you on how to incorporate this state-of-the-art technology into your project.

Graphics hardware has rapidly evolved since the first 3Dfx Voodoo 1 card was released in 1996. By 1999, NVIDIA had coined the term graphics processing unit (GPU) with its GeForce 256. Over the next two decades, GPUs developed from fixed-function pipelines into highly programmable hardware on which developers can implement their own custom algorithms.

Nowadays, every computer, tablet, and mobile phone has a built-in graphics processor. That means real-time rendering is continually being improved by the hardware-acceleration technology inside each device. This has resulted in an explosive increase in speed and image quality for real-time rendering applications.

Much of a GPU's chip area is dedicated to shader processors called shader cores. Thousands of programmable shaders have been developed to control GPUs. The development of massively parallel GPUs means processing takes minutes as opposed to the hours required previously.

GPUs also gain speed by focusing on a narrow set of highly parallelizable tasks. In rasterization jobs, for example, dedicated hardware manages the z-buffer, rapidly accesses texture images and other buffers, and finds which pixels are covered by a triangle.
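The z-buffer mentioned above is simple enough to sketch in a few lines. The example below assumes fragments arrive as `(x, y, depth, color)` tuples with smaller depths closer to the camera; both the tuple layout and the function name are made up for illustration.

```python
def draw_fragments(fragments, width, height):
    """Resolve visibility with a z-buffer.

    fragments: iterable of (x, y, depth, color); smaller depth is closer.
    Returns a height x width grid holding the color of the nearest fragment.
    """
    far = float("inf")
    depth = [[far] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:  # keep only the nearest fragment seen so far
            depth[y][x] = z
            color[y][x] = c
    return color


# Three fragments compete for pixel (0, 0); one lands on pixel (1, 0).
image = draw_fragments(
    [(0, 0, 5.0, "far"), (0, 0, 2.0, "near"), (0, 0, 3.0, "mid"), (1, 0, 1.0, "other")],
    width=2,
    height=1,
)
```

Because each pixel's test is independent of every other pixel's, this is the kind of work a GPU can spread across thousands of cores.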

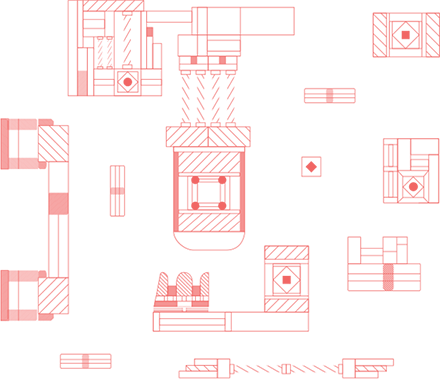

The new RTX-capable GPUs add dedicated ray-tracing acceleration hardware and implement a new rendering pipeline to enable real-time ray tracing. RTX shaders for all the objects in the scene are loaded into memory and stand ready to calculate object intersections.

RTX also uses a new denoising module. If noise is reduced without the need to send out more rays, then the output for ray tracing is faster and better. NVIDIA hasn’t released a lot of information about the specialized cores in their new Turing GPUs, but we do know that the Tensor “AI” Cores accelerate the deep learning behind denoising, while the dedicated RT Cores are much faster at calculating ray intersections against a Bounding Volume Hierarchy (BVH), which is a tree-shaped data structure that wraps the objects in a scene in nested bounding volumes.
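To show why a BVH speeds up intersection tests, here is a minimal sketch of a ray-versus-box "slab" test and a traversal that skips every subtree whose box the ray misses. The `Node` class, the axis-aligned boxes, and the object names are assumptions for this example; the hardware's actual BVH layout is not described in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    lo: tuple                                     # minimum corner of the bounding box
    hi: tuple                                     # maximum corner of the bounding box
    children: list = field(default_factory=list)
    primitive: object = None                      # leaf payload (hypothetical object name)


def hits_box(origin, direction, lo, hi):
    """Slab test: does the ray origin + t * direction (t >= 0) cross the box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0:
            if o < a or o > b:
                return False  # parallel to this slab and outside it
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def candidates(node, origin, direction):
    """Collect leaf primitives whose boxes the ray touches, skipping missed subtrees."""
    if not hits_box(origin, direction, node.lo, node.hi):
        return []
    if node.primitive is not None:
        return [node.primitive]
    found = []
    for child in node.children:
        found.extend(candidates(child, origin, direction))
    return found


left = Node((-3, -1, -1), (-1, 1, 1), primitive="left")
right = Node((1, -1, -1), (3, 1, 1), primitive="right")
root = Node((-3, -1, -1), (3, 1, 1), children=[left, right])
```

A ray fired at the right-hand box never runs an exact intersection test against the left-hand object, and with a deep tree that pruning removes most of the scene from consideration.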

In order to develop applications for NVIDIA’s RTX technology, an application programming interface (API) is required. An API is a defined set of functions and data types through which an application talks to a library, a driver, or a piece of hardware such as a GPU.

There are a few APIs available for developers to access NVIDIA RTX. The first is NVIDIA’s own OptiX programming interface. Creators can also access tools for RTX programming through Microsoft’s DirectX Raytracing API (DXR). A third API is Vulkan, a cross-platform graphics standard from the non-profit Khronos Group. All three APIs share a similar approach.

NVIDIA’s own OptiX (OptiX Application Acceleration Engine) API provides developers with “a simple, recursive, and flexible pipeline” for real-time ray tracing. OptiX is used in a number of industries: films and games, design and scientific visualization, defense applications, and, notably, audio synthesis. The OptiX API works with RTX technology to achieve optimal ray tracing performance on NVIDIA GPUs.

OptiX is a high-level API, which means it processes the entire rendering algorithm, not just the ray-tracing portion. The OptiX engine can execute large hybrid algorithms—for example, rasterization and ray tracing used together—with excellent flexibility.

OptiX has been integrated into several commercial software products, like Autodesk Arnold, Chaos Group V-Ray, Isotropix Clarisse, Optis, Pixar RenderMan, and SolidWorks Visualize, used by SolidWorks freelancers.

The OptiX devkit includes two major components: the ray-tracing engine for renderer development and a post-processing pipeline for image processing. According to NVIDIA, the OptiX AI denoising technology combines with the new NVIDIA Tensor Cores in the Quadro GV100 and Titan V to deliver three times the performance of previous-generation GPUs with noiseless, fluid interactivity.

OptiX uses a just-in-time (JIT) compiler that constantly analyzes code to optimize speed. The JIT compiler generates customized kernels for developer-generated programs for ray generation, material shading, object intersection, and scene traversal. Compact object models and a ray-tracing compiler map directly to the RTX platform running on NVIDIA’s Volta and Turing GPUs.

OptiX works with NVIDIA’s CUDA toolkit. CUDA is a parallel computing platform and programming model introduced by NVIDIA in 2006. It lets developers run general-purpose parallel code on the company’s CUDA-capable GPUs. A CUDA-capable GPU and the CUDA toolkit are required in order to use OptiX.

For real-time ray tracing with OptiX, developers use CUDA to give instructions about how rays should work in specific situations. For example, a ray will behave differently when hitting granite versus when it hits water. OptiX with CUDA allows developers to customize these hit conditions however they prefer, providing lots of creative flexibility.

In 2014, NVIDIA added a second library to OptiX 3.5 called OptiX Prime. OptiX Prime is a low-level API for the refinement of ray tracing. OptiX Prime doesn’t process the entire rendering algorithm, only the ray tracing part, so it can’t recompile the algorithm for new GPUs, refactor the computation for performance, or use network appliances.

Using the OptiX API for ray tracing is a multi-step process. First, the developer defines the ray-generation program. Will the rays be shot from the "camera" parallel to each other, or in a linear perspective? The developer can then define several other kinds of programs.

Examples of these programs are available with the OptiX software developer’s kit (SDK). Here’s a summary:

Ray-generation programs: The first or primary ray sent out on a ray path uses the ray-generation program. This user-defined program traces the ray through the scene, writing results from the trace into an output buffer.

Scene-traversal programs: OptiX allows for the efficient culling of parts of the scene not intersected by a given ray. This enables OptiX to hide the complexity of highly optimized, architecture-specific implementations for traversal acceleration.

Intersection and bounding-box programs: A bounding box program calculates bounding boxes for custom primitives such as spheres or curves. This helps the intersection program return true or false based on whether a ray intersects the object.

Closest-hit and any-hit programs: OptiX allows the developer to specify one or more types of rays, for example radiance, ambient occlusion, or shadow rays. The developer can create a closest-hit and an any-hit program to describe shading behavior when an object is intersected by each ray type.

Any-hit or closest-hit programs can be left unbound; for example, an opaque material may only require an any-hit program for shadow rays because finding any intersection between a light and a shading point is sufficient to determine that the point is in shadow.

Miss programs: A miss program is invoked when no object is hit by a ray. It can also be specified for different ray types and can be left unbound. Miss programs can be most helpful when creating environment maps or backgrounds.
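The way these program types fit together can be sketched in plain Python. This is only an illustration of the control flow described above, not the OptiX API: real OptiX programs are written in CUDA, and every name below (the `PROGRAMS` table, the one-sphere scene, the returned strings) is hypothetical.

```python
import math

SPHERE = {"center": (0.0, 0.0, -5.0), "radius": 1.0}  # hypothetical one-object scene


def intersect(origin, direction):
    """Intersection program: distance along a unit-length ray to the sphere, or None."""
    oc = [o - c for o, c in zip(origin, SPHERE["center"])]
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - SPHERE["radius"] ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-6 else None


def closest_hit_radiance(t):
    return "surface color"      # shading would happen here


def any_hit_shadow(t):
    return "in shadow"          # any hit is enough, so no shading is needed


def miss_radiance():
    return "background color"   # for example, an environment-map lookup


def miss_shadow():
    return "lit"


# One entry per ray type; a slot set to None is left unbound.
PROGRAMS = {
    "radiance": {"closest_hit": closest_hit_radiance, "any_hit": None, "miss": miss_radiance},
    "shadow": {"closest_hit": None, "any_hit": any_hit_shadow, "miss": miss_shadow},
}


def trace(origin, direction, ray_type):
    """Run the programs bound to ray_type for a single ray."""
    bound = PROGRAMS[ray_type]
    t = intersect(origin, direction)
    if t is None:
        return bound["miss"]()
    if bound["any_hit"] is not None:
        return bound["any_hit"](t)  # a real tracer could stop traversal here
    return bound["closest_hit"](t)


def ray_generation(directions):
    """Ray-generation program: trace one primary ray per entry into an output buffer."""
    return [trace((0.0, 0.0, 0.0), d, "radiance") for d in directions]
```

Calling `ray_generation([(0, 0, -1), (0, 1, 0)])` sends one ray into the sphere and one past it, so the output buffer holds one shaded result and one background result.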

OptiX runs on several NVIDIA GPUs, but informal user tests have suggested it performs best on a Volta GPU. With NVIDIA Volta GPUs and RTX ray tracing technology, developers can achieve real-time, cinematic-quality rendering with lifelike lighting and materials in interactive applications.

Microsoft’s DirectX® Raytracing (DXR) API was introduced in March 2018. Developed in close collaboration with NVIDIA, DXR extends DirectX 12 with fully integrated ray tracing. As with NVIDIA’s OptiX, Microsoft’s DXR can handle hybrid algorithms using ray tracing and traditional rasterization techniques. NVIDIA has worked with Microsoft to enable full RTX support for DXR.

Microsoft’s DirectX Raytracing (DXR) API fully integrates ray tracing into DirectX for game developers. At the moment, it’s marketed as a supplement to rasterization rather than a replacement for it. DXR focuses on enabling this hybrid technique to increase the quality of real-time rendering. As the technology progresses, Microsoft’s goal is to use DXR for true global illumination in real-time rendering applications.

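The first step with DXR is to build acceleration structures on two levels. At the bottom level, the application specifies a set of geometries (vertex and index buffers for objects in the world). At the top level, the application specifies a list of instance descriptions, each of which places a bottom-level structure in the scene. Splitting the structure this way lets many instances reuse the same geometry, so a scene can contain multiple complex shapes without duplicating their data.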
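The two-level idea can be sketched with a pair of small classes. This is a conceptual model only, not DXR code: the class names are invented, and where a DXR instance description carries a 3x4 transform matrix, the sketch uses a plain translation to stay short.

```python
from dataclasses import dataclass


@dataclass
class BottomLevel:
    """Geometry stored once: a vertex buffer plus an index buffer of triangles."""
    vertices: list   # [(x, y, z), ...]
    indices: list    # [(i0, i1, i2), ...]


@dataclass
class Instance:
    """Top-level entry: a reference to shared geometry plus its placement."""
    geometry: BottomLevel
    translation: tuple   # simplification of a full transform matrix


def world_triangles(top_level):
    """Expand every instance into world-space triangles."""
    for inst in top_level:
        moved = [
            tuple(v + t for v, t in zip(vert, inst.translation))
            for vert in inst.geometry.vertices
        ]
        for i0, i1, i2 in inst.geometry.indices:
            yield (moved[i0], moved[i1], moved[i2])


# One triangle mesh, placed in the scene twice.
tri = BottomLevel(vertices=[(0, 0, 0), (1, 0, 0), (0, 1, 0)], indices=[(0, 1, 2)])
scene = [Instance(tri, (0, 0, 0)), Instance(tri, (5, 0, 0))]
triangles = list(world_triangles(scene))
```

The vertex data exists once however many instances point at it, which is the main design benefit of splitting the structure into two levels.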

The second step is to define the ray-tracing pipeline for shaders and textures. As with OptiX, this allows the developer to specify which shaders and textures should be used when a specific type of ray hits a particular object.

The third step in using DXR is calling DispatchRays. DispatchRays activates the ray generation shader. The shader then calls the TraceRay intrinsic, which activates the proper hit-or-miss shader. TraceRay can also be called inside hit-or-miss shaders, allowing for “multi-bounce” lighting effects.
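The call chain in this third step can be mimicked in a few lines of Python. The functions below only borrow the names of the DXR concepts to show the control flow; they are not the DirectX API (real DXR shaders are written in HLSL), and the scene, the recursion limit, and the brightness values are assumptions for this sketch.

```python
MAX_DEPTH = 3  # hypothetical recursion limit for this sketch


def scene_lookup(ray):
    """Stand-in for traversal: the ray meets a mirror on its first two bounces, then sky."""
    return "mirror" if ray["bounce"] < 2 else None


def trace_ray(ray, depth=0):
    """Plays the role of the TraceRay intrinsic: route the ray to a hit or miss shader."""
    hit = scene_lookup(ray)
    if hit is None or depth >= MAX_DEPTH:
        return miss_shader(ray)
    return closest_hit_shader(ray, hit, depth)


def closest_hit_shader(ray, hit, depth):
    # A hit shader may trace again, which is what produces multi-bounce lighting.
    bounced = {"pixel": ray["pixel"], "bounce": ray["bounce"] + 1}
    return 0.5 * trace_ray(bounced, depth + 1)  # each mirror halves the energy


def miss_shader(ray):
    return 1.0  # constant sky brightness


def ray_generation_shader(pixel):
    return trace_ray({"pixel": pixel, "bounce": 0})


def dispatch_rays(width, height):
    """Plays the role of DispatchRays: run the ray-generation shader once per pixel."""
    return [[ray_generation_shader((x, y)) for x in range(width)] for y in range(height)]


image = dispatch_rays(2, 1)
```

Each pixel's ray bounces off two mirrors before reaching the sky, so every entry in `image` ends up at a quarter of the sky's brightness.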

The NVIDIA GameWorks Ray Tracing devkit provides tools and resources for using the Microsoft DXR API with NVIDIA RTX, including a ray-tracing denoiser module. With this technology available on an industry-standard platform like Microsoft, every PC game developer now has access to real-time ray tracing.

The Vulkan API is the third and newest API used to program real-time ray-tracing technology on RTX hardware. It was announced by the non-profit Khronos Group at the Game Developers Conference (GDC) in 2015. Khronos is focused on creating an API for software vendors in game, mobile, and workstation development.

Vulkan was initially referred to as the “next generation OpenGL initiative.” It was derived from AMD's Mantle API, which AMD donated to Khronos with the intent of providing a foundation for a low-level API that could be standardized across the industry. NVIDIA’s Vulkan Ray Tracing Extensions will add hybrid ray-tracing and rasterization techniques to Vulkan in a cross-platform API.

Vulkan specifies shader programs, compute kernels, objects, and operations. It’s also a pipeline with programmable and fixed functions. Khronos intends the Vulkan API to have a variety of advantages, such as batching to lower driver overhead, more direct control over the GPU, reduced CPU usage, and better scaling on multi-core CPUs.

Rather than compiling a high-level shading language such as GLSL at run time, Vulkan drivers ingest shaders already translated into an intermediate binary format called SPIR-V. Because shaders are pre-compiled, application initialization is faster, and a greater variety of shaders can be used per scene. A Vulkan driver needs to do only GPU-specific optimization and code generation, resulting in easier driver maintenance.
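The intermediate binary format Vulkan consumes is SPIR-V, and a quick way to tell a pre-compiled shader from source text is to check its first word. The sketch below relies on two facts from the SPIR-V specification: a module is a stream of 32-bit words, and the first word is the magic number `0x07230203`. The helper name is made up, and this is only a sanity check, not a validator.

```python
import struct

SPIRV_MAGIC = 0x07230203  # first 32-bit word of every SPIR-V module


def looks_like_spirv(blob):
    """Return True when blob starts with the SPIR-V magic number in either byte order."""
    if len(blob) < 4 or len(blob) % 4 != 0:
        return False  # SPIR-V is a stream of whole 32-bit words
    little = struct.unpack("<I", blob[:4])[0]
    big = struct.unpack(">I", blob[:4])[0]
    return SPIRV_MAGIC in (little, big)


# A fake module header followed by padding, versus GLSL source text.
binary_shader = struct.pack("<I", SPIRV_MAGIC) + b"\x00" * 16
source_shader = b"#version 450\nvoid main() {}\n"
```

A build script could use a check like this to catch the mistake of shipping shader source where the application expects a compiled module.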

The Vulkan API works well for advanced graphics cards as well as for graphics hardware on mobile devices. Similar to OpenGL, the Vulkan API is not locked to a single OS. Vulkan runs on Android, Linux, Tizen, Windows 7, Windows 8, and Windows 10. Freely licensed third-party support for iOS and macOS is also available.

According to the Vulkan user tutorial, using Vulkan requires four prerequisites:

In order to begin developing Vulkan applications, creators need to access the SDK. It includes the headers, standard validation layers, debugging tools, and a loader for all the Vulkan functions. The loader looks up the functions in the driver at runtime.

Unlike DirectX 12, Vulkan doesn’t include a library for linear-algebra operations. Its open source tutorial instructs users to download GLM, a library designed for graphics APIs that is also frequently used with OpenGL.

Vulkan by itself also doesn’t include tools for displaying rendered results. The tutorial recommends that developers use the GLFW library, which supports Windows, Linux, and macOS, to create a window.

Graphics development in Vulkan proceeds with triangle drawing, vertex buffer creation, uniform buffer allocation, texture mapping, depth buffering, model loading, mipmap generation, and multisampling. Because Vulkan is an open standard maintained by a non-profit consortium, open-source tutorials are freely available online for anyone interested.

As GPUs continue to grow even more powerful and APIs are developed to harness that power, NVIDIA, Microsoft and Khronos Group are leading the charge towards replacing rasterization with real-time ray tracing as the standard algorithm for 3D real-time rendering.

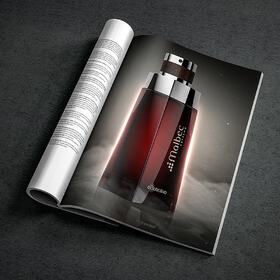

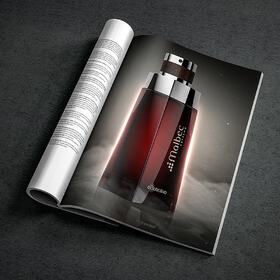

In addition to cinematic realism for applications like games, real-time ray tracing is being used more and more to enhance architectural applications, product design services, scientific visualizations, and more.

Right now, ray-tracing tools such as Autodesk Arnold, V-Ray from Chaos Group, Pixar’s RenderMan, and NVIDIA’s latest GPU technology are being used by creators to generate photorealistic mockups and prototypes, model how light interacts with their designs, and, as GPUs offer even more computing power, create interactive entertainment that will be more and more cinematic in its scope.

The real-time, movie-quality rendering produced by RTX and its accompanying APIs is the culmination of over two decades of work by some of the world’s largest technology firms on computer graphics algorithms and GPU architecture.

By using a ray-tracing engine running on NVIDIA Turing and Volta GPUs programmed with OptiX, Microsoft DirectX Raytracing (DXR), or Vulkan, and with tools such as the GameWorks SDK, developers have an expanded capability to realize creative visions for their clients.

Over time, real-time ray tracing will create more and more realistic reflections, shadows, and refractions to give interactive consumer applications all the hyper-real qualities of cinematic production. In the near term, though, hybrid methods that combine real-time ray tracing with traditional rasterization techniques will continue to be contenders.

Despite real-time ray tracing being a relatively new technology in the rendering scene, Cad Crowd has freelancers who have already mastered the technology. They can work with you to create high-quality 3D models and renderings that are sure to make your video game or movie look top of the line. If you're interested in chatting with us, contact us for a free quote!